There is certainly a lot of talk about the benefits of using Vista, but a lot of administrators and users seem to be avoiding it and instead hold on to Windows XP – which now appears to have a better reputation than ever! Well, here is a small reason to upgrade to Vista or Windows Server 2008.

Microsoft introduced a new event, 4964, called the Special Groups Feature. The purpose of this feature is to log event 4964 to the security event log when a member of a group you specify logs on to a computer.

So let’s say you want to know when a member of a local Administrator group logs on to a computer (and with EventSentry you could get an email when that happens for example), then you can accomplish that with the special groups feature.

In order to use this feature you need to do three things:

- Determine the SID of the group(s) you want to monitor

- Specify the SID(s) of the groups you want to monitor in a registry key

- Ensure that you are auditing the Special Logon Feature (enabled by default)

One way to obtain the SID of a group is to use the getsid.exe tool which is part of the Windows XP SP2 Support Tools and other Microsoft Resource Kits. Note that the primary purpose of this tool is to compare the SID of two user accounts (so it requires you to specify two user/group accounts), but you can just enter the same group name twice to get around this. Here is an example output of the tool:

getsid \\mydc “Domain Admins” \\mydc “Domain Admins”

The SID for account BUILTIN\Domain Admins matches account BUILTIN\Domain Admins

The SID for account BUILTIN\Domain Admins is S-1-5-21-9817441204-4587651373-9817264971-512

The SID for account BUILTIN\Domain Admins is S-1-5-21-9817441204-4587651373-9817264971-512

As you can see you need to point to tool to computer where the group exists, in our case I used a domain controller since I want to monitor if somebody from the Domain Admins group logs on to the computer. If you monitor a built-in group (e.g. Administrators) then you will see that the SID is much shorter and the same across all your computers.

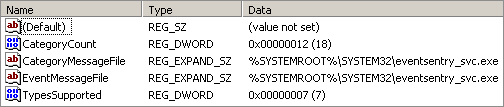

Now that we know the SID, we can specify it in the registry. Navigate to key HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\Lsa\Audit and create a new String with the name SpecialGroups.

The value for this new string will be the SID of the group you want to monitor, and you can separate multiple SIDs with a semicolon. For example:

S-1-5-32-544;S-1-5-32-123-54-65

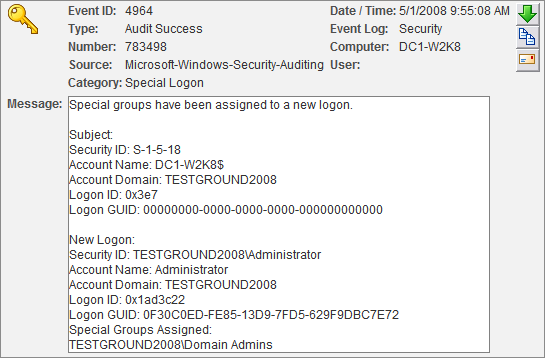

You do not have to reboot after making this change, it is effective immediately with the first subsequent login. The event that is being logged will look similar to this (screen shot from the EventSentry Web Reports):

The relevant information is shown in the lower part of the event in the New Logon section. Security ID shows the user that logged on, and Special Groups Assigned shows the group the account is a member of (of course this group has to be specified in the registry).

The relevant information is shown in the lower part of the event in the New Logon section. Security ID shows the user that logged on, and Special Groups Assigned shows the group the account is a member of (of course this group has to be specified in the registry).

Voila. This feature probably makes most sense on critical servers, though I would recommend enabling it on all workstations as well since you probably want to know if a member of the local Administrators group logs on. But of course this also means that you need to be running Vista on your network :-).

Since this feature needs to be activated using the registry, you can use AutoAdministrator to push this registry change to multiple computers. AutoAdministrator has actually been rewritten from scratch and we will be releasing a new version 2.0 very soon.