Passwords are everywhere. You use them to log on to your network, log in to business applications and Facebook, check your personal email, and more.

I’ll be rethinking passwords in this blog post, and looking at what you can do to make authenticating with passwords more secure.

As it turns out, the British comedian Nick Helm won an award in Edinburgh for the funniest joke, just one day before I posted this article. He won for the joke: “I needed a password eight characters long so I picked Snow White and the Seven Dwarves.”

Of course, passwords have been around for a long time, and even though more advanced ways to authenticate, like fingerprint readers and other biometric scans, exist today, passwords still prevail as the primary method of authentication for the majority of networks and computer systems. One-time password tokens like RSA’s SecurID are another secure alternative, but any system can be exploited, as recent events have shown. Even fingerprint readers can be fooled, either with brainpower or through more “traditional” methods.

Password Cracking goes Mainstream

By 1999, Windows NT 4.0 had gained a lot of traction in networks across the globe. Of course, we all know that with popularity comes quantity, and with quantity comes increased exposure. As more and more networks were using Windows NT (to authenticate, among other things), a new piece of software called l0phtcrack (it had a GUI!) was gaining popularity. What l0phtcrack could do – and quite easily, I might add – was download all password hashes from the Windows NT user database and then run both brute-force and dictionary attacks on those hashes.

If a user chose a password that was in the English dictionary, l0phtcrack could often crack it within seconds. If the password was a bit more complicated, it would take a couple of days. Due to the way Windows NT stored password hashes (in the name of compatibility with LAN Manager), passwords with 7 or fewer characters were particularly easy to crack: the LM hash converts the password to uppercase and hashes it as two independent 7-character halves, so a short password fits entirely into the first half. And, as CPUs grew stronger and faster, the time required to run those brute-force attacks kept getting shorter. Of course, this general mechanism was, and is, not restricted to Windows NT and l0phtcrack; you can do the same thing with any password hash. For example, back in 2001 I used a Perl script (with a dictionary text file) against hashes obtained from our NIS system to show the UNIX admins that the NIS installation was, politely speaking, insecure.
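To put the LAN Manager weakness into numbers, here is a back-of-the-envelope sketch. It assumes the commonly described LM scheme (the password is uppercased, padded to 14 characters and hashed as two independent 7-character halves; a password of 7 or fewer characters leaves the second half as pure padding, which hashes to a well-known constant) and roughly 69 candidate characters per position (95 printable ASCII characters minus the 26 lowercase letters). It estimates search effort only; it does not implement the hash.

```python
# Back-of-the-envelope search effort for LAN Manager (LM) hashes.
# Assumption: about 69 usable characters per position once the password
# has been uppercased (95 printable ASCII characters minus 26 lowercase).
LM_ALPHABET = 95 - 26


def keyspace(alphabet_size: int, max_length: int) -> int:
    """Number of strings of length 1..max_length over the alphabet."""
    return sum(alphabet_size ** n for n in range(1, max_length + 1))


def lm_work(alphabet_size: int) -> int:
    """Each 7-character half is hashed on its own, so an attacker can
    search two 7-character spaces instead of one 14-character space."""
    return 2 * keyspace(alphabet_size, 7)


if __name__ == "__main__":
    whole = keyspace(LM_ALPHABET, 14)
    split = lm_work(LM_ALPHABET)
    print(f"one 14-character search : {whole:.3e} candidates")
    print(f"two 7-character searches: {split:.3e} candidates")
    print(f"reduction factor        : {whole / split:.3e}")
```

Under these assumptions, splitting the hash cuts the work by a factor of more than a trillion, before the lost lowercase letters are even considered.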

So choosing a password that is in any dictionary is clearly not a good idea (and really shouldn’t be allowed when setting the password), since a dictionary attack can be fast. An easy way to protect against a simple dictionary attack is to require users to include at least one non-letter character. Since words in dictionaries usually don’t contain characters other than letters, this is certainly a step in the right direction.
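A minimal sketch of those two checks at password-set time. The tiny `WORDS` set is a hypothetical stand-in for a real word list, and the checks are deliberately naive, mirroring the simple rule described above.

```python
# Hypothetical stand-in for a real word list (e.g. loaded from a file).
WORDS = {"house", "garden", "password", "tree"}


def is_dictionary_word(password: str, words: set[str]) -> bool:
    """True if the password, ignoring case, is exactly a dictionary word."""
    return password.lower() in words


def has_non_letter(password: str) -> bool:
    """True if at least one character is not a letter."""
    return any(not ch.isalpha() for ch in password)


def acceptable(password: str, words: set[str] = WORDS) -> bool:
    """Naive rule: reject dictionary words and require one non-letter."""
    return not is_dictionary_word(password, words) and has_non_letter(password)


if __name__ == "__main__":
    for candidate in ["house", "House", "house7", "h0use"]:
        print(candidate, acceptable(candidate))
```

Note that “house7” passes both checks.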

Secure Password for Dummies

Technically, adding a single non-letter character to an English word would indeed prevent a dictionary attack. A naive one, that is! A persistent and motivated attacker (and most attackers are persistent and motivated) could modify their dictionary attack to automatically prepend and append numbers to dictionary words, so that a password like “house7” or even “house1!” could still be found. This may sound like a lot of work: trying a single digit before or after every word (“0house”, “1house” … “house0”, “house1” … “house9”) increases the number of guesses per word about 20-fold. True, but dictionary attacks are so fast that this technique would still be preferable to a brute-force attack. An attacker would still prefer a 4-hour dictionary attack over a 2-month brute-force attack (I made those numbers up, but the idea is that dictionary attacks are a lot faster). It also turns out that users tend to use the same numbers and special characters in their passwords, e.g. “1!”, “99”, “123” and so forth. Even worse, there appears to be a set of “favorite” passwords: http://www.schneier.com/blog/archives/2006/12/realworld_passw.html.
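The modified attack described above can be sketched as a candidate generator. This is an illustrative Python sketch, not l0phtcrack’s (or any other tool’s) actual rule set; `COMMON_SUFFIXES` holds only the example suffixes mentioned in the text.

```python
from collections.abc import Iterable, Iterator

# Illustrative suffixes only (the examples from the text).
COMMON_SUFFIXES = ["1!", "99", "123"]


def mangle(word: str, suffixes: Iterable[str] = COMMON_SUFFIXES) -> Iterator[str]:
    """Yield simple variants of a dictionary word, the way a modified
    dictionary attack would try them."""
    for digit in "0123456789":
        yield digit + word  # 0house ... 9house
        yield word + digit  # house0 ... house9
    for suffix in suffixes:
        yield word + suffix  # house1!, house99, house123


def candidates(words: Iterable[str]) -> Iterator[str]:
    """Each plain word followed by its mangled variants."""
    for word in words:
        yield word
        yield from mangle(word)


if __name__ == "__main__":
    guesses = list(candidates(["house"]))
    print(len(guesses))  # 24: 1 plain word + 20 digit variants + 3 suffixes
    print("house7" in guesses, "house1!" in guesses)  # True True
```

A handful of rules per word only multiplies the dictionary size by a small constant, which is why the attack stays far cheaper than brute force.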

The obvious way to protect against a dictionary attack is to not use words from a dictionary in the first place. Indeed, many authentication systems require a mix of uppercase and lowercase letters, numbers and special characters. A requirement like this will surely protect us against even the most sophisticated dictionary attacks. Mission accomplished. Easy!

Not so fast. Attackers still have a few more options at their disposal. The attacker can:

- Look for software vulnerabilities so that they can inject their own malware, giving them access to the server, workstation or network. They would then simply create a new user, reset the password of an existing user, or, if possible, just download whatever data they need. Event log monitoring can help here, since you can get notified when a user is created or deleted or a password is changed (you could set up a filter to email you when a user password is changed between 11pm and 6am, for example).

- Employ social engineering techniques to get access to the password, either through physical access, a phone call or something similar. A combination of the first two approaches is most common: an attacker sends a malformed PDF (or similar) to the target, which then implants a Trojan horse.

- Use a brute-force attack to guess the password, either against an offline database (if the attacker was lucky enough to obtain one) or directly against the login system (a web site, a Windows domain, etc.). OWASP has a good article about brute-force attacks against web sites, which can be very susceptible to these types of attacks.
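The off-hours alert from the first item could look roughly like the following sketch. It is plain Python over hypothetical event records (dictionaries with `event_id`, `time` and `user` keys), not EventSentry’s actual filter configuration, and it leaves out sending the email. The IDs are the Windows Security log events for a user account being created (4720), a password change attempt (4723), a password reset attempt (4724) and a user account being deleted (4726), as logged by Windows Vista/Server 2008 and later.

```python
from datetime import datetime, time

# Windows Security log event IDs (Vista / Server 2008 and later).
WATCHED_EVENTS = {
    4720: "user account created",
    4723: "password change attempted",
    4724: "password reset attempted",
    4726: "user account deleted",
}

OFF_HOURS_START = time(23, 0)  # 11 pm
OFF_HOURS_END = time(6, 0)     # 6 am


def is_off_hours(moment: datetime) -> bool:
    """True between 11 pm and 6 am; the window wraps past midnight."""
    t = moment.time()
    return t >= OFF_HOURS_START or t < OFF_HOURS_END


def alerts(events):
    """Yield a message for every watched event that happened off-hours.
    `events` is an iterable of hypothetical dicts with 'event_id',
    'time' (a datetime) and 'user' keys."""
    for event in events:
        description = WATCHED_EVENTS.get(event["event_id"])
        if description and is_off_hours(event["time"]):
            yield f"{event['time']:%Y-%m-%d %H:%M} {event['user']}: {description}"


if __name__ == "__main__":
    sample = [
        {"event_id": 4724, "time": datetime(2011, 8, 30, 2, 15), "user": "jdoe"},
        {"event_id": 4724, "time": datetime(2011, 8, 30, 14, 0), "user": "asmith"},
    ]
    for message in alerts(sample):
        print(message)  # only the 2:15 am reset is reported
```

The wrap-around comparison (`or` instead of `and`) is the detail most easily gotten wrong when a time window spans midnight.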

Brute-force attacks generally only work in two cases:

- One has access to the (hashed or encrypted) password database.

- The system one is trying to log on to does not employ an account-lockout technique, so that a brute-force attack can be aimed directly at a logon portal.

Most network systems do employ an account lockout mechanism, and I highly recommend you enable this on systems which support it. Many systems, in particular web sites, do not support this functionality, however, so brute-force attacks are still a real risk.

When enabling account lockout, it’s important to keep your end users in mind. Your users will ultimately need to log on to a network in order to do their work, and if the system locks them out every time they type in a wrong password twice, then your support team will spend a lot of time unlocking user accounts, and your users (depending on how calm they are)

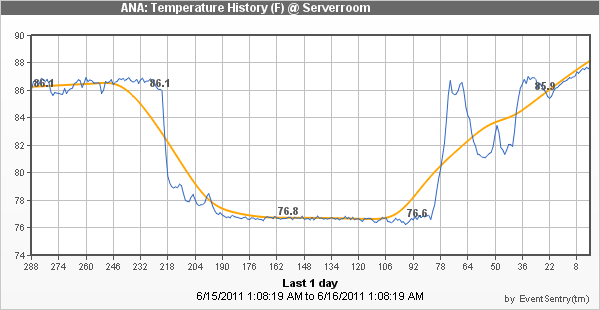

will be more or less annoyed. A log monitoring solution like EventSentry can email you when an account lockout occurs on a system (e.g. on Windows through the event log, on other devices through Syslog).
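The trade-off between security and annoyed users comes down to two knobs: how many failures trigger a lockout, and how long it lasts. A minimal in-memory sketch follows; the default threshold of 5 attempts and the 15-minute duration are hypothetical values chosen for illustration, not a recommendation from this article or any vendor.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class LockoutPolicy:
    threshold: int = 5                           # failures before lockout (assumed)
    duration: timedelta = timedelta(minutes=15)  # lockout length (assumed)
    failures: dict[str, int] = field(default_factory=dict)
    locked_until: dict[str, datetime] = field(default_factory=dict)

    def is_locked(self, user: str, now: datetime) -> bool:
        until = self.locked_until.get(user)
        return until is not None and now < until

    def record_attempt(self, user: str, success: bool, now: datetime) -> bool:
        """Register a logon attempt; return True if the account is locked."""
        if self.is_locked(user, now):
            return True  # attempts during a lockout are refused outright
        if success:
            self.failures.pop(user, None)  # a good logon resets the counter
            return False
        self.failures[user] = self.failures.get(user, 0) + 1
        if self.failures[user] >= self.threshold:
            self.locked_until[user] = now + self.duration
            self.failures.pop(user)  # start counting afresh after the lock
            return True
        return False


if __name__ == "__main__":
    policy = LockoutPolicy(threshold=3)
    start = datetime(2011, 8, 30, 9, 0)
    for second in range(3):
        locked = policy.record_attempt("jdoe", success=False,
                                       now=start + timedelta(seconds=second))
    print(locked)                                                   # True
    print(policy.is_locked("jdoe", start + timedelta(minutes=16)))  # False
```

An automatic unlock after a fixed duration keeps a brute-force attack slow while sparing the help desk most unlock calls; a lockout that requires manual unlocking is stricter but costlier for support.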

All this raises the ultimate question: Is it better to use a short complex password like C0mp1ex! (8 characters), or a long, simpler password like ClimbingUpATree (15 characters)? Time to bring out the calculator. To come up with a conclusion, we’ll create three password policies: one that requires complex but shorter passwords, and two with longer but less complex passwords.

Password Policy 1:

“Complex Is Best”

Minimum length: 8 characters

Required character groups: One lowercase letter, one uppercase letter, one number, one special character out of: !@#$%^&*()_+[]{}

Possible passwords: 1,370,114,370,683,136 (78 possible characters in each of the 8 positions; yes, that’s more than one quadrillion)

That’s mighty complex, but a password like C0mp1ex! would be valid.

Password Policy 2:

“A little long is enough”

Minimum length: 10 characters

Required character groups: One lowercase letter, one uppercase letter, one number. Special characters are allowed but not required.

Possible passwords: 839,299,365,868,340,224 (62 possible characters in each of the 10 positions; that’s 839 quadrillion and a little bit)

Of course, there would be even more possible passwords if a user decides to include a special character in their password (after all, the policy only specifies the minimum requirement, and we wouldn’t dare prohibit additional complex characters now, would we?). This policy allows about 612 times as many passwords as the previous policy, even though it only requires two more characters. Its flaw, however, is that a user could potentially use insecure passwords like Gardenhose1, which could be guessed with a sophisticated dictionary attack.

Password Policy 3:

“The longer, the better”

Minimum length: 15 characters

Required character groups: One lowercase letter, one uppercase letter

Possible passwords: 54,960,434,128,018,667,122,720,768 (52 possible characters in each of the 15 positions; that’s 54 septillion, 960 sextillion, 434 quintillion – you get the idea)

Phew, you need a lot of GPUs and a time machine to brute-force a password from that selection – and that’s without even requiring a user to include a number! This policy allows roughly 40 billion (about 40,113,756,416) times as many passwords as the first policy. And long passwords are not hard to come up with: just use a simple sentence. “Idontlikepasswords55” is a pretty long password (20 characters) and not that hard to remember at all.
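The three policy figures can be reproduced with a few lines of Python. Like the figures in the text, `naive_keyspace` counts every string of exactly the minimum length over the full allowed alphabet (26 lowercase, 26 uppercase, 10 digits and, for Policy 1, the 16 listed special characters). That slightly overstates each policy, because it includes strings that lack a required group; `constrained_keyspace` uses inclusion-exclusion to count only strings that contain at least one character from every required group.

```python
from itertools import combinations

# Character-group sizes used by the three policies.
LOWER, UPPER, DIGITS = 26, 26, 10
SPECIALS = len("!@#$%^&*()_+[]{}")  # the 16 characters listed in Policy 1

# policy name -> (minimum length, sizes of the required character groups)
POLICIES = {
    "1 (Complex Is Best)": (8, [LOWER, UPPER, DIGITS, SPECIALS]),
    "2 (A little long is enough)": (10, [LOWER, UPPER, DIGITS]),
    "3 (The longer, the better)": (15, [LOWER, UPPER]),
}


def naive_keyspace(length: int, groups: list[int]) -> int:
    """Every string of exactly `length` over the union of all groups."""
    return sum(groups) ** length


def constrained_keyspace(length: int, groups: list[int]) -> int:
    """Strings of exactly `length` that contain at least one character from
    every group (inclusion-exclusion over the groups that are left out)."""
    alphabet = sum(groups)
    total = 0
    for k in range(len(groups) + 1):
        for missing in combinations(groups, k):
            total += (-1) ** k * (alphabet - sum(missing)) ** length
    return total


if __name__ == "__main__":
    baseline = naive_keyspace(*POLICIES["1 (Complex Is Best)"])
    for name, (length, groups) in POLICIES.items():
        naive = naive_keyspace(length, groups)
        exact = constrained_keyspace(length, groups)
        print(f"Policy {name}: {naive:,} naive, {exact:,} with every group, "
              f"{naive // baseline:,}x Policy 1")
```

Floor division keeps the ratios as whole numbers, which is why Policy 2 comes out as 612 rather than 612.57. The constrained counts are somewhat smaller than the naive ones, but the ordering of the three policies does not change.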

A compromise?

As you can see, length trumps complexity in most cases, but as is often the case in computer security, things aren’t always as simple as they seem. The numbers are correct, but a longer password without complex requirements might, as mentioned before, encourage a user to choose a password that could be guessed with a sophisticated dictionary attack.

For example, “Gardenhose1” would match the second policy’s requirements but would not be very secure. Users also tend to use family names, user names and the like in their passwords. A smart attacker could leverage this and adapt their dictionary attack accordingly. So if “Jean Reno” were to use “JeanReno1948” as his password, it would still not be as secure as assumed, despite its 12-character length.

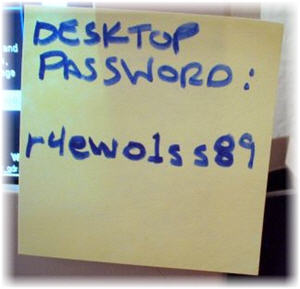

We can see that every additional character in the length of a password increases the number of possible combinations exponentially, far more than a few additional special characters do. Still, not requiring special characters at all might allow end users to pick passwords that are in a dictionary. Requiring extremely complicated passwords, on the other hand, will make it difficult for many users to remember them, and your end users might resort to writing their passwords down on a Post-it note or the bottom of their keyboard, or come up with “secure” passwords like ASDFasdf1!. Yes, annoyed users can be very creative. Put yourself in the shoes of somebody who is not familiar with security and needs to choose a password: would you voluntarily choose something like T3a#fE@8?

And when users are forced to change their passwords regularly, many of them simply follow a predictable pattern, for example:

- Cats2010!

- Cats2011!

or

- Q1cats2011!

- Q2cats2011!

This is heaven for an attacker: as long as the user sticks to the same pattern, the attacker will always know the password – even if the user changes it every 3 months. See “Changing Passwords” (http://www.schneier.com/blog/archives/2010/11/changing_passwo.html) for a more thorough discussion on this topic.
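A system could try to catch this pattern when the password is changed. The sketch below is one hypothetical heuristic: compare the old and new password after removing all digits and ignoring case. It assumes the old password is available in plaintext, which is normally only the case during a change, when the user types in the current password.

```python
import re


def skeleton(password: str) -> str:
    """Lowercase the password and drop every digit, keeping the 'pattern'."""
    return re.sub(r"\d", "", password).lower()


def too_similar(old: str, new: str) -> bool:
    """Hypothetical check: reject a new password that differs from the
    old one only in its digits or letter case."""
    return skeleton(old) == skeleton(new)


if __name__ == "__main__":
    print(too_similar("Cats2010!", "Cats2011!"))             # True
    print(too_similar("Q1cats2011!", "Q2cats2011!"))         # True
    print(too_similar("Cats2011!", "Idontlikepasswords55"))  # False
```

A heuristic like this only catches the simplest increments; a user who moves the number elsewhere or swaps one word still slips through.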

So what’s the solution? As is so often the case, probably a little bit of everything. Dictionary passwords need to be avoided like the plague, so we can’t get around requiring some complexity. Complexity alone can be misleading, though, so a minimum length of 12 characters seems like a good baseline. In addition, enable account lockout and set a (reasonable) maximum password age. A pretty good password policy would look like this:

- 18 characters minimum

- Lowercase, uppercase & numbers

- 180-day password age

- No part of first name, last name, username, etc. allowed in password
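A minimal sketch of a validator for the policy above, assuming the account’s first name, last name and username are available when the password is set. The 180-day password age is enforced by the directory or system over time rather than at set time, so it is left out. “No part of” is interpreted here, as an assumption, as: no name component of 3 or more characters may appear in the password, ignoring case.

```python
import re

MIN_LENGTH = 18


def name_parts(*fields: str, min_part: int = 3) -> set[str]:
    """Split names/usernames into lowercase parts, e.g. 'jean.reno' ->
    {'jean', 'reno'}. Parts shorter than `min_part` are ignored (assumption)."""
    parts = set()
    for value in fields:
        for part in re.split(r"[^0-9A-Za-z]+", value.lower()):
            if len(part) >= min_part:
                parts.add(part)
    return parts


def policy_violations(password: str, first: str, last: str, username: str) -> list[str]:
    """Return a list of problems; an empty list means the password passes."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if not re.search(r"[a-z]", password):
        problems.append("no lowercase letter")
    if not re.search(r"[A-Z]", password):
        problems.append("no uppercase letter")
    if not re.search(r"[0-9]", password):
        problems.append("no number")
    lowered = password.lower()
    for part in sorted(name_parts(first, last, username)):
        if part in lowered:
            problems.append(f"contains '{part}'")
    return problems


if __name__ == "__main__":
    print(policy_violations("JeanReno1948", "Jean", "Reno", "jreno"))
    print(policy_violations("Idontlikepasswords55", "Jean", "Reno", "jreno"))
```

Returning every violation at once, rather than stopping at the first, lets the user fix the password in one attempt, which helps with the annoyance factor discussed above.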

Also, don’t forget to educate your users, so that they understand the why and the how. Tell them that corporate espionage is a real threat, and suggest using a sentence as a password. Of course, there will always be naysayers, but the majority of your user base should understand this.

Abuse

I have seen web sites (e.g. banking) that require a complex password, yet insist that it be no longer than 8 characters! Whatever the reason behind something like that, it’s far from secure and counterproductive. Even if I wanted to choose a strong 14-character password, I couldn’t, and I would have to settle for something less secure.

Password Reuse

Another often overlooked risk is the reuse of passwords. Nowadays, people are required to use passwords at a multitude of web sites and systems. Some of those web sites store confidential information (SSN, credit card), but many don’t.

The more often one uses the same password, the higher the risk that it is compromised. As such, your password, if used in more than one place, is only as secure as the weakest site that stores it. Don’t use the password from your banking web site on your photo-sharing site!

I personally don’t care too much if some cracker hacks the photo-sharing site I use, and downloads (and cracks) all the passwords. But I do care if my password to my banking web site is compromised. An attacker may not be able to easily guess a password at bankofamerica.com, but if I use the same password as my photo-sharing site, then I’m just asking for trouble. Recycling and reuse are a good thing – but not with passwords.

I hope this longer-than-expected article inspired you to review your corporate password policy, and maybe even your personal password habits. If you made it this far, here are some relevant links regarding … well … passwords!

Nick Helm’s password joke:

http://www.bbc.co.uk/news/uk-scotland-14646532

Interesting Statistics:

http://www.passwordresearch.com/stats/statindex.html

A Strong Password Isn’t the Strongest Security:

http://www.nytimes.com/2010/09/05/business/05digi.html