If you live in an English-speaking country like the United States, the United Kingdom or Australia, then you are in the lucky position that every character in your language can be represented by the ASCII table. Many other languages aren’t as lucky, which is no surprise given that over 1000 written languages exist. Most of these languages cannot be represented in ASCII, most notably Asian and Arabic languages.

Understanding Unicode is no easy feat, however. The abbreviations alone can be mind-boggling: UTF-7, 8, 16, 32, UCS-2, BOM, BMP, code points, Big-Endian, Little-Endian and so forth. Unicode support is particularly interesting when dealing with different platforms, such as Windows, Unix and OS X.

It’s not all that bad though, and once the dust settles it can all make sense. No, really. As such, the purpose of this article is to give you a basic understanding of Unicode, enough so that the mention of the word Unicode doesn’t send cold shivers down your back.

Unicode is essentially one large character set that includes all characters of written languages, including special characters like symbols and so forth. The goal – and this goal is reality today – is to have one character set for all languages.

Back in 1963, when the first draft of ASCII was published, internationalization was probably not at the top of the committee members’ minds. Understandable, considering that not too many people were using computers back then. Things have changed since then, as computers are turning up in pretty much every electrical device (maybe with the exception of stoves and blenders).

The easiest way to start is, of course, with ASCII (American Standard Code for Information Interchange). Gosh were things simple back in the 60s. If you want to represent a character digitally, you would simply map it to a number between 0 and 127. Voila, all set. Time to drive home in your Chevrolet, and listen to a Bob Dylan, Beach Boys or Beatles record. I won’t go into the details now, but for the sake of completeness I will include the ASCII representation of the string “Bob Dylan”:

String: B o b (space) D y l a n

Decimal: 66 111 98 32 68 121 108 97 110

Hexadecimal: 0x42 0x6F 0x62 0x20 0x44 0x79 0x6C 0x61 0x6E

Binary: 01000010 01101111 01100010 00100000 01000100 01111001 01101100 01100001 01101110

Computers, plain and simple as they are, store everything as numbers, and as such we need a way to map numbers to letters, and vice versa. This is the purpose of the ASCII table, which tells our computers to display a “B” instead of 66.
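To make the number-to-letter mapping a bit more tangible, here is a small C sketch that prints the decimal, hexadecimal and binary value of every character in an ASCII string. The function names `byte_to_binary` and `print_ascii_table` are made up for this example, and the code assumes the input really is plain ASCII.

```c
#include <stdio.h>
#include <stddef.h>

/* Write the 8-bit binary form of one byte into out (room for 8 digits + NUL). */
void byte_to_binary(unsigned char byte, char out[9])
{
    for (int bit = 0; bit < 8; bit++) {
        /* Walk from the most significant bit (0x80) down to the least. */
        out[bit] = (byte & (0x80u >> bit)) ? '1' : '0';
    }
    out[8] = '\0';
}

/* Print one line per character: the character, then decimal, hex and binary. */
void print_ascii_table(const char *text)
{
    char binary[9];
    for (size_t i = 0; text[i] != '\0'; i++) {
        unsigned char code = (unsigned char)text[i];
        byte_to_binary(code, binary);
        printf("'%c'  %3u  0x%02X  %s\n",
               text[i], (unsigned)code, (unsigned)code, binary);
    }
}
```

Calling `print_ascii_table("Bob Dylan")` prints nine lines, one per character, including the space in the middle.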

Since the 7-bit ASCII table has a maximum of 128 characters (0 to 127), any ASCII character can be represented using 7 bits (though they usually consume 8 bits now). This makes calculating how long a string is, for example, quite easy. In C programs, ASCII characters are represented using chars, which use 1 byte (=8 bits) of storage. Here is an example in C:

#include <string.h>

char author[] = "The Beatles";

size_t authorLen = strlen(author); // authorLen = 11

size_t authorSize = sizeof(author); // authorSize = 12

The only reason the two values differ is that C automatically appends a 0x0 character at the end of a string literal (to indicate where it terminates), and as such the size of the array will always be one char(acter) larger than the length of the string.

So, this is all fine and well if we only deal with “simple” languages like English. Once we try to represent a more complex language, Japanese for example, things start to get more challenging. The biggest problem is the sheer number of characters: there are simply more than 128 characters in the world’s written languages. ASCII was extended to 8-bit (primarily to accommodate European languages), but this still only scratches the surface when you consider Asian and Arabic languages.

Hence, a big problem with ASCII is that it is essentially a fixed-length, 8-bit encoding, which makes it impossible to represent complex languages. This is where the Unicode standard comes in: It gives each character a unique code point (number), and includes variable-length encodings as well as 2-byte (or more) encodings.

But before we go too deep into Unicode, we’ll just blatantly pretend that Unicode doesn’t exist and think of a different way to store Japanese text. Yes! Let us enter a world where every language uses a different encoding! No matter what they want to make you believe, having countless encodings around is fun and exciting. Well, actually it’s not, but let’s take a look at why.

The ASCII characters end at 127, leaving another 128 values (128 to 255) in a byte for other languages. Even though I’m not a linguist, I know that there are more than 128 characters in the rest of the world. Additionally, many Asian languages have significantly more than 256 characters, making a multi-byte encoding necessary (since you cannot represent every character with one byte).

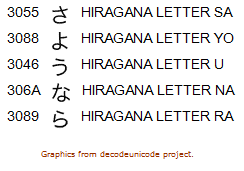

This is where encodings come in (or better, “came” in before Unicode was established), which are basically like stencils. Let’s use Japanese for our code page example. I don’t speak Japanese unfortunately, but let’s take a look at the word さようなら, which means “Farewell” in Japanese (you are probably familiar with its pronunciation, “sayōnara”).

The ASCII table obviously has no representation for these characters, so we would need a new table. As it turns out, there are two main encodings for Japanese: Shift-JIS and EUC-JP. Yes, as if it’s not bad enough to have one encoding per language!

So code pages serve the same purpose as the ASCII table: they map numbers to letters. The problem with code pages, as opposed to Unicode, is that both the author and the reader need to view the text in the same code page. Otherwise, the text will just be garbled. This is what “sayōnara” looks like in the aforementioned encodings:

EUC-JP

0xA4 B5 A4 E8 A4 A6 A4 CA A4 E9

Shift_JIS

0x82 B3 82 E6 82 A4 82 C8 82 E7

The numerical representation of the same word in EUC-JP and Shift_JIS is, as is to be expected, completely different, so knowing the encoding is vital. If the encodings don’t match, then the text will be meaningless. And meaningless text is useless.

You can imagine that things can get out of hand when one party (party can be an Operating System, Email client, etc.) uses EUC-JP, and the other Shift_JIS for example. They both represent Japanese characters, but in a completely different way.
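To see just how differently the two encodings store the same characters, here is a rough C sketch that converts EUC-JP bytes to Shift_JIS. Both encodings are built on the JIS X 0208 character table, so for two-byte characters the conversion is pure arithmetic. The function name `eucjp_to_sjis` is my own invention, and the sketch deliberately handles only ASCII and two-byte JIS X 0208 characters (which is enough for hiragana); a real program should use a proper conversion library instead.

```c
#include <stddef.h>

/*
 * Convert EUC-JP text to Shift_JIS. Only ASCII bytes and two-byte
 * JIS X 0208 characters are handled. Returns the number of bytes written,
 * or 0 if the input contains anything else or dst is too small.
 */
size_t eucjp_to_sjis(const unsigned char *src, size_t src_len,
                     unsigned char *dst, size_t dst_cap)
{
    size_t in = 0, out = 0;

    while (in < src_len) {
        unsigned char b1 = src[in];

        if (b1 < 0x80) {                 /* ASCII is stored the same way */
            if (out + 1 > dst_cap) return 0;
            dst[out++] = b1;
            in += 1;
            continue;
        }

        /* Anything else must be a lead/trail pair in the range A1..FE. */
        if (b1 < 0xA1 || b1 > 0xFE || in + 1 >= src_len) return 0;
        unsigned char b2 = src[in + 1];
        if (b2 < 0xA1 || b2 > 0xFE) return 0;
        if (out + 2 > dst_cap) return 0;

        /* EUC-JP is the JIS X 0208 row/cell pair with the high bits set. */
        unsigned j1 = b1 - 0x80u;
        unsigned j2 = b2 - 0x80u;

        /* Shift_JIS squeezes two JIS rows into each lead byte. */
        unsigned s1 = ((j1 + 1) >> 1) + (j1 < 0x5F ? 0x70u : 0xB0u);
        unsigned s2 = (j1 & 1) ? j2 + (j2 < 0x60 ? 0x1Fu : 0x20u)
                               : j2 + 0x7Eu;

        dst[out++] = (unsigned char)s1;
        dst[out++] = (unsigned char)s2;
        in += 2;
    }
    return out;
}
```

Feeding it the EUC-JP bytes for “sayōnara” (A4 B5 A4 E8 A4 A6 A4 CA A4 E9) yields the Shift_JIS bytes 82 B3 82 E6 82 A4 82 C8 82 E7. Same characters, same table, entirely different bytes.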

Encodings can either (to a certain degree) be auto-detected, or specified as some sort of meta information. Below is an HTML page with the same Japanese word, Shift_JIS encoded:

<HTML>

<TITLE>Shift_JIS Encoded Page</TITLE>

<META HTTP-EQUIV="Content-Type" CONTENT="text/html; charset=Shift_JIS">

<BODY>

さようなら

</BODY>

</HTML>

You can paste this into an editor, save it as a .html file (make sure the editor actually saves the file in the Shift_JIS encoding), and then view it in your favorite browser. Try changing “Shift_JIS” to “EUC-JP”, fun things await you.

But I am getting carried away. After all, this post is about Unicode, not encodings. So, Unicode solves these problems by giving every character from every language a unique code point. No more “Shift_JIS”, no more “EUC-JP” (not even to mention all the other encodings out there), just Unicode.

Once a document is encoded in Unicode, specifying a code page is no longer necessary – as long as the client (reader) supports the particular Unicode encoding (e.g. UTF-8) the text is encoded with.

The five major Unicode encodings are:

UTF-8

UCS-2

UTF-16 (an extension of UCS-2)

UTF-32

UTF-7

All of these encodings are Unicode, and represent Unicode characters. That is, UTF-8 is just as capable as UTF-16 or UTF-32. In UTF-8, UTF-16 and UTF-32, the number in the name is the size of one code unit in bits, which is also the minimum number of bits required to store a single Unicode code point (the 2 in UCS-2, by contrast, counts bytes). As such, UTF-32 can potentially require 4 x as much storage as UTF-8, depending on the text that is being encoded. I will be ignoring UTF-7 going forward, as its use is not recommended and it’s not widely used anymore.

The biggest difference between UTF-8 and UCS-2/UTF-16/UTF-32 is that UTF-8 is a variable length encoding, as opposed to the others being fixed-length encodings. OK, that was a lie. UCS-2, the predecessor of UTF-16, is indeed a fixed length encoding, whereas UTF-16 is a variable length encoding. In most use cases however, UTF-16 uses 2 bytes per character and behaves much like a fixed length encoding. UTF-32 on the other hand, and that is not a lie, is a fixed-length encoding that always uses 4 bytes to store a character.

Let’s look at this table which lists the 4 major encodings and some of their properties:

| Encoding | Variable/Fixed | Min Bytes | Max Bytes |
| --- | --- | --- | --- |
| UTF-8 | variable | 1 | 4 |
| UCS-2 | fixed | 2 | 2 |
| UTF-16 | variable | 2 | 4 |
| UTF-32 | fixed | 4 | 4 |

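What this means is that in order to represent a Unicode character (e.g. さ), a variable length encoding might require more than 1 byte, and in UTF-8’s case up to 4 bytes. UTF-8 potentially needs more bytes than UTF-16 for the same character because it maintains backward compatibility with ASCII: the single-byte values are reserved for ASCII, and every byte of a multi-byte sequence spends some of its bits on marking itself as a lead or continuation byte. A 3-byte sequence, for example, carries only 16 bits of actual code point data.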
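If you want to see where those marker bits go, here is a minimal C sketch of a UTF-8 encoder for a single code point. The function name `utf8_encode` is just something I picked for illustration; the bit patterns in the comments are the standard UTF-8 layouts.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Encode one Unicode code point as UTF-8 into out (room for 4 bytes).
 * Returns the number of bytes written, or 0 if cp is a surrogate
 * (U+D800..U+DFFF) or lies above U+10FFFF.
 */
size_t utf8_encode(uint32_t cp, unsigned char out[4])
{
    if (cp <= 0x7F) {                  /* 0xxxxxxx: plain ASCII */
        out[0] = (unsigned char)cp;
        return 1;
    }
    if (cp <= 0x7FF) {                 /* 110xxxxx 10xxxxxx: 11 data bits */
        out[0] = (unsigned char)(0xC0 | (cp >> 6));
        out[1] = (unsigned char)(0x80 | (cp & 0x3F));
        return 2;
    }
    if (cp <= 0xFFFF) {                /* 1110xxxx 10xxxxxx 10xxxxxx: 16 data bits */
        if (cp >= 0xD800 && cp <= 0xDFFF) return 0;
        out[0] = (unsigned char)(0xE0 | (cp >> 12));
        out[1] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[2] = (unsigned char)(0x80 | (cp & 0x3F));
        return 3;
    }
    if (cp <= 0x10FFFF) {              /* 11110xxx plus three 10xxxxxx bytes */
        out[0] = (unsigned char)(0xF0 | (cp >> 18));
        out[1] = (unsigned char)(0x80 | ((cp >> 12) & 0x3F));
        out[2] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[3] = (unsigned char)(0x80 | (cp & 0x3F));
        return 4;
    }
    return 0;
}
```

For さ (code point 0x3055) this produces the three bytes E3 81 95, while the letter B still comes out as the single byte 0x42.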

Windows uses UTF-16 to store strings internally, as do most Unicode frameworks such as ICU and Qt’s QString. Most Unixes on the other hand use UTF-8, and it’s also the most commonly found encoding on the web. Mac OS X is a bit of a different beast; since its kernel is BSD-based, the BSD system functions use UTF-8, whereas Apple’s Cocoa framework uses UTF-16.

UCS-2 or UTF-16

I had already mentioned that UTF-16 is an extension of UCS-2, so how does it extend it and why does it extend it?

You see, Unicode is so comprehensive now that it encompasses more than what you can store in 2 bytes. All characters (code points) from 0x0000 to 0xFFFF are in the “BMP”, the “Basic Multilingual Plane”. This is the plane that holds most of the character assignments, but additional planes exist (Unicode defines 17 in total). Here are the ones you are most likely to come across:

• The “BMP”, “Basic Multilingual Plane”, 0x0000 -> 0xFFFF

• The “SMP”, “Supplementary Multilingual Plane”, 0x10000 -> 0x1FFFF

• The “SIP”, “Supplementary Ideographic Plane”, 0x20000 -> 0x2FFFF

• The “SSP”, “Supplementary Special-purpose Plane”, 0xE0000 -> 0xEFFFF

So technically, having 2 bytes available is not even enough anymore to cover all the available code points; you can only cover the BMP. And this is the main difference between UCS-2 and UTF-16: UCS-2 only supports code points in the BMP, whereas UTF-16 supports code points in the supplementary planes as well, through something called “surrogate pairs”.
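Surrogate pairs sound scarier than they are. Here is a short C sketch of how UTF-16 turns a code point into one or two 16-bit units; the function name `utf16_encode` is an assumption of mine, while the constants 0xD800 and 0xDC00 are the start of the high and low surrogate ranges defined by Unicode.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Encode one code point as UTF-16 code units (not bytes, so no endianness yet).
 * Returns the number of 16-bit units written: 1 for the BMP, 2 for a
 * surrogate pair, or 0 if cp is not a valid code point to encode.
 */
size_t utf16_encode(uint32_t cp, uint16_t out[2])
{
    if (cp >= 0xD800 && cp <= 0xDFFF) return 0;   /* reserved for surrogates */
    if (cp > 0x10FFFF) return 0;                  /* beyond the last plane */

    if (cp <= 0xFFFF) {                           /* BMP: same as UCS-2 */
        out[0] = (uint16_t)cp;
        return 1;
    }

    uint32_t v = cp - 0x10000;                    /* 20 bits remain */
    out[0] = (uint16_t)(0xD800 + (v >> 10));      /* high surrogate: top 10 bits */
    out[1] = (uint16_t)(0xDC00 + (v & 0x3FF));    /* low surrogate: bottom 10 bits */
    return 2;
}
```

A BMP character like さ (0x3055) stays a single unit, whereas a character from the SMP such as 0x1F600 becomes the pair D83D DE00.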

Representation in Unicode

So let’s look at the above sample text in Unicode, shall we? Sayonara Shift_JIS & EUC-JP! The site http://rishida.net/tools/conversion/ has some great online tools for Unicode, one of which is called “UniView”. It shows us the actual Unicode code points, the symbol itself and the official description.

The official Unicode notation writes a code point as U+ followed by its hexadecimal value, so for the above letters we would write:

U+3055 U+3088 U+3046 U+306A U+3089

With this information, we can now apply one of the UTF encodings to see the difference:

UTF-8

E3 81 95 E3 82 88 E3 81 86 E3 81 AA E3 82 89

UTF-16

30 55 30 88 30 46 30 6A 30 89

UTF-32

00 00 30 55 00 00 30 88 00 00 30 46 00 00 30 6A 00 00 30 89

So UTF-8 uses 5 more bytes than UCS-2/UTF-16 to represent the same exact characters. Remember that UCS-2 and UTF-16 would be identical for this text since all characters are in the BMP. UTF-32 uses yet 5 more bytes than UTF-8 and would require the most storage space, as is to be expected.
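You don’t have to count bytes by hand, of course. The following C sketch adds up the storage a list of code points needs in each encoding. All function names here are hypothetical, and the code assumes it is only ever given valid code points (no surrogates, nothing above 0x10FFFF).

```c
#include <stddef.h>
#include <stdint.h>

/* Bytes needed to store one (valid) code point in UTF-8. */
size_t utf8_size(uint32_t cp)
{
    if (cp <= 0x7F)   return 1;
    if (cp <= 0x7FF)  return 2;
    if (cp <= 0xFFFF) return 3;
    return 4;
}

/* UTF-16: one 2-byte unit inside the BMP, a 4-byte surrogate pair outside. */
size_t utf16_size(uint32_t cp)
{
    return cp <= 0xFFFF ? 2 : 4;
}

/* UTF-32: always one 4-byte unit, whatever the code point is. */
size_t utf32_size(uint32_t cp)
{
    (void)cp;
    return 4;
}

/* Sum the per-character sizes over a whole array of code points. */
size_t encoded_size(const uint32_t *cps, size_t count,
                    size_t (*size_of)(uint32_t))
{
    size_t total = 0;
    for (size_t i = 0; i < count; i++) {
        total += size_of(cps[i]);
    }
    return total;
}
```

For the five code points 0x3055, 0x3088, 0x3046, 0x306A and 0x3089 this gives 15 bytes for UTF-8, 10 for UTF-16 and 20 for UTF-32. Run it over plain ASCII text instead and the ranking flips in favor of UTF-8.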

What you can also see here is that UTF-16 (in its big-endian form, as shown above) essentially mirrors the U+ notation, at least for characters in the BMP.

Fixed Length or Variable Length?

Both encoding types have their advantages and disadvantages, and I will be comparing the most popular UTF encodings, UTF-8 and UCS-2, here:

Variable Length UTF-8:

• ASCII-compatible

• Uses potentially less space, especially when storing ASCII

• String analysis/manipulation (e.g. length calculation) is more CPU-intensive

Fixed Length UCS-2:

• Potentially wastes space, since it always uses a fixed amount of storage

• String analysis/manipulation is usually less CPU intensive
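The length calculation is a good example of that CPU trade-off. In the C sketch below (function names are mine, and the UTF-8 input is assumed to be valid and NUL-terminated), counting characters in UTF-8 means looking at every single byte, while for UCS-2 it is one division.

```c
#include <stddef.h>

/* Count the code points in a NUL-terminated, valid UTF-8 string. */
size_t utf8_codepoint_count(const char *s)
{
    size_t count = 0;
    for (; *s != '\0'; s++) {
        /* Continuation bytes look like 10xxxxxx; all others start a character. */
        if (((unsigned char)*s & 0xC0) != 0x80) {
            count++;
        }
    }
    return count;
}

/* UCS-2: every character is exactly 2 bytes, so no scanning is needed. */
size_t ucs2_char_count(size_t byte_len)
{
    return byte_len / 2;
}
```

Note that plain `strlen` on a UTF-8 string still works, but it returns the number of bytes, not the number of characters.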

Which encoding to use will depend on the application. If you are creating a web site, then you should probably choose UTF-8. If you are storing data in a database however, then it will depend on the type of strings that will be stored. For example, if you are only storing a language such as Japanese, where most characters take 3 bytes in UTF-8 but only 2 in UCS-2, then it is probably better to use UCS-2. If you are storing both ASCII and languages that require Unicode, then UTF-8 is probably a better choice. An extreme example would be storing English-only text in a UCS-2 database: it would essentially use twice as much storage as an ASCII version, without any tangible benefits.

One of the strongest suits of UTF-8, at least in my opinion, is its backward compatibility with ASCII. UTF-8 never uses byte values from 0 to 127 (0x00 to 0x7F) inside its multi-byte sequences, since those are reserved for ASCII characters. This means that all ASCII text is automatically valid UTF-8, since any UTF-8 parser will recognize those bytes as ASCII characters and render them appropriately.

The BOM

And this brings us to the next topic: the BOM (header). BOM stands for “Byte Order Mark”, and is a 2 to 4 byte long header at the beginning of a Unicode text stream, e.g. a text file (2 bytes for UTF-16, 3 for UTF-8, 4 for UTF-32). If a text editor does not recognize a UTF-16 BOM header, then it will usually display the BOM header as either the þÿ or ÿþ characters.

The purpose of the BOM header is to describe the Unicode encoding, including the endianness, of the document. Note that a BOM is usually not used for UTF-8, since UTF-8 is a sequence of single bytes and has no byte order to describe.

Let’s revisit the example from earlier, the UTF-16 encoding looked like this:

30 55 30 88 30 46 30 6A 30 89

If we wanted to store this text in a file, including a BOM header, then it could also look like this:

FF FE 55 30 88 30 46 30 6A 30 89 30

“FF FE” is the BOM header, and in this case indicates that a UTF-16 Little Endian encoding is used. The same text in UTF-16 Big Endian would look like this:

FE FF 30 55 30 88 30 46 30 6A 30 89

The BOM header is generally only useful when Unicode encoded documents are being exchanged between systems that use different Unicode encodings, but given the extremely little overhead it certainly doesn’t hurt to add it to any UTF-16 encoded document. As such, Windows tools such as Notepad add a 2-byte BOM header when saving text as UTF-16 (which Windows often simply labels “Unicode”). It is the responsibility of the text reader (e.g. an editor) to interpret the BOM header correctly. Linux on the other hand, being a UTF-8 fan and all, does not need to (and does not) use a BOM header, at least not by default.
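Interpreting the BOM is mostly a matter of comparing the first few bytes against a handful of known signatures. Here is a C sketch of how a reader might do that; the `bom_type` enum and the `detect_bom` function are made-up names for this example, while the byte signatures themselves are the standard ones.

```c
#include <stddef.h>

typedef enum {
    BOM_NONE, BOM_UTF8, BOM_UTF16_LE, BOM_UTF16_BE, BOM_UTF32_LE, BOM_UTF32_BE
} bom_type;

/*
 * Inspect the first bytes of a buffer for a BOM. Returns the detected type
 * and, if bom_len is not NULL, stores how many bytes the BOM occupies.
 */
bom_type detect_bom(const unsigned char *data, size_t len, size_t *bom_len)
{
    bom_type type = BOM_NONE;
    size_t size = 0;

    /*
     * UTF-32 has to be checked before UTF-16, because FF FE 00 00 also
     * starts with the UTF-16 LE mark. (A UTF-16 LE file whose first real
     * character is U+0000 would be misread here; that is rare in practice.)
     */
    if (len >= 4 && data[0] == 0xFF && data[1] == 0xFE &&
        data[2] == 0x00 && data[3] == 0x00) {
        type = BOM_UTF32_LE; size = 4;
    } else if (len >= 4 && data[0] == 0x00 && data[1] == 0x00 &&
               data[2] == 0xFE && data[3] == 0xFF) {
        type = BOM_UTF32_BE; size = 4;
    } else if (len >= 3 && data[0] == 0xEF && data[1] == 0xBB &&
               data[2] == 0xBF) {
        type = BOM_UTF8; size = 3;
    } else if (len >= 2 && data[0] == 0xFF && data[1] == 0xFE) {
        type = BOM_UTF16_LE; size = 2;
    } else if (len >= 2 && data[0] == 0xFE && data[1] == 0xFF) {
        type = BOM_UTF16_BE; size = 2;
    }

    if (bom_len != NULL) {
        *bom_len = size;
    }
    return type;
}
```

A reader would call this on the first bytes of a file, skip `bom_len` bytes, and then decode the rest accordingly. If no BOM is found, it has to fall back on a default or on guessing.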

Tools & Resources

There are a variety of resources and tools available to help with Unicode authoring, conversions, and so forth.

A nifty online converter that I already mentioned earlier can be found at http://rishida.net/tools/conversion/, and also check out UniView: http://rishida.net/scripts/uniview/.

The official Unicode website is of course a great resource too, though potentially overwhelming to mere mortals that only have to deal with Unicode occasionally. The best place to start is probably their basic FAQ: http://www.unicode.org/faq/basic_q.html.

I hope this provides some clarification for those who know that Unicode exists, but are not entirely comfortable with the details.

さようなら!